"No Holding Back FileMaker Blogging"

|

Navigation:

|

Support this site by clicking on a sponsor below or becoming a patron!

FileMaker 20 Video Tutorials

Become a patron of this FREE web site!

|

|

Recent Blogs:

|

Currency Formatting

|

Gathering Portals

|

Multiple Choice Picker

|

Popups and Pickers

|

Window Locking

|

|

Meta-Consulting

Meta-consulting is a service Database Pros offers to help other developers

over programming hurdles via GoToMeeting. Email John Mark Osborne or call John Mark at (909) 393-4664 to find out more about this service.

|

The Philosophy of FileMaker recommends PCI!

|

Quick Tip:

|

Switch to Layout

When deploying a solution on FileMaker Server, the initial layout is the last one used when closing FileMaker in single-user mode. This could very well be a list view and slow down the opening of your remote solution. No problem, just set the correct layout with no records in the open script, right? Unfortunately, the initial layout loads even if you don't see it. The only way to switch layouts on open before FileMaker actually opens is to use the "switch to layout" option in File Options. |

|

|

Create a FileMaker Calendar

|

Fun Stuff:

|

FileMaker, Inc.

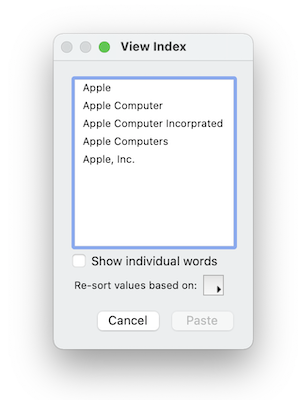

FileMaker, Inc. or FMI is a wholly owned subsidiary of Apple, Inc. It was once named Claris when it also sold MacWrite, MacPaint, Claris Emailer, ClarisWorks, etc. but changed it's name in 1998 when it focused on FileMaker. FileMaker started out as a DOS application called Nutshell but was shaped into the powerhouse it is today by Claris and FMI. |

|

|

Document Management Videos

|

RSS Feed

|

|

|

|

Data Parsing

Level: Intermediate

Version: FileMaker 17

Category: Calculations

Tuesday, June 26, 2018

Imagine receiving a file from a client, or your boss, with improperly delimited data. It's actually quite common. Just because someone can build a database doesn't mean they can structure it properly. A common scenario is first and last names stored together in a single column, but you never know what you are going to encounter, so it's best to be well versed in the text functions in FileMaker. In fact, data parsing can even help you create features that wouldn't otherwise be possible! That's why I consider data parsing one of the most important skill sets for database developers.

FYI: When I worked for the county government, they had an Oracle database which they wanted to import into FileMaker. I met with the Oracle developer and, to my dismay, found they were storing data on a single record that should have been stored on three records. This made my job significantly harder, as I had to parse the data before moving it into FileMaker. Luckily, I was well versed in data parsing!

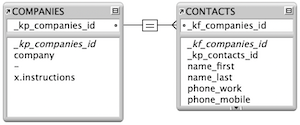

This Article

In the example for this article, first and last names are imported into a single field, and city, state and zip are imported into another single field. Once the data has been imported, a variety of formulas will be used in a script to fix the data by separating it into multiple fields. In a future article, parsing will be used to create features.

SIDETIP: The main reason first and last names are stored in separate fields is to facilitate sorting by last name. The same goes for city, state and zip.

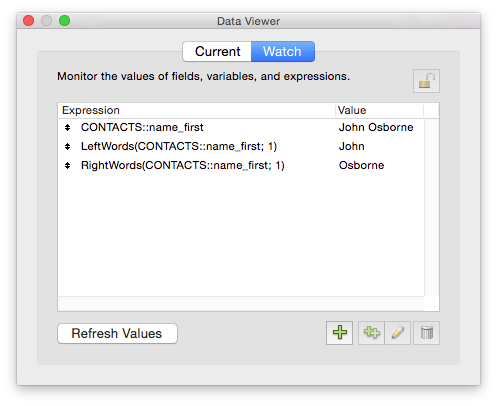

The Data Viewer

Once the data has been imported, it's best to experiment with your parsing formulas in the Data Viewer rather than inefficiently running a script over and over again until the results are correct. The first parsing formulas to master are the ones using the word functions. Using the LeftWords function, the first name can easily be isolated from a field containing a full name. The last name can be parsed using the RightWords function.

SIDETIP: The Data Viewer allows you to walk through records to see how a calculation performs on different data.
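If you want to experiment outside FileMaker, here is a rough Python model of LeftWords and RightWords. It is an approximation only: the hypothetical helpers `left_words` and `right_words` treat whitespace as the sole word separator, which is simpler than FileMaker's real word-break rules, so always confirm actual behavior in the Data Viewer.

```python
def left_words(text: str, count: int) -> str:
    """Rough stand-in for LeftWords: the first `count` whitespace-separated words."""
    words = text.split()
    return " ".join(words[:max(count, 0)])


def right_words(text: str, count: int) -> str:
    """Rough stand-in for RightWords: the last `count` whitespace-separated words."""
    words = text.split()
    # Guard against count <= 0, because words[-0:] would return every word.
    return " ".join(words[-count:]) if count > 0 else ""


full_name = "John Osborne"  # sample value: first and last name in one field
print(left_words(full_name, 1))   # John
print(right_words(full_name, 1))  # Osborne
```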

The trouble comes when first or last names with multiple words are encountered. Data like middle names, titles and suffixes could even be present. The question is... does your data contain any anomalies requiring more sophisticated formulas? You only want to program a calculation as complex as required. Check your data first, then program!

For this example, let's assume that two-word last names are a possibility but there are no other data surprises. Remember, the full name was imported into the name_first field. The most common solution I see from developers is to test the data and provide two possible results. In other words, a Case statement is employed to extract the last name:

Case(

WordCount(CUSTOMERS::name_first) = 3;

RightWords(CUSTOMERS::name_first; 2);

RightWords(CUSTOMERS::name_first; 1)

)

SIDETIP: When a function is nested inside another function, the calculation evaluates from the inside out. In other words, the innermost function passes its result to the parameter of the function surrounding it.

While I love a good Case statement, I try to avoid them if I can since they can expand the size of your formula tremendously. In this situation, there is an easier solution using the WordCount function:

RightWords(

CUSTOMERS::name_first;

WordCount(CUSTOMERS::name_first) - 1

)

Both formulas are based on an analysis of the incoming data showing that there are only one-word first names and one- or two-word last names. If the data has one hiccup, such as a two-word first name, the formula fails. While a formula that attempts to handle every scenario could be written, it will never truly handle every situation. It's best to familiarize yourself with as many techniques as possible in order to be prepared for whatever data might come your way. Once you understand the data, you can write a customized formula to deal specifically with the data at hand. No more and no less than needed is the key to success.
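The two formulas can be compared side by side in a small Python sketch. It uses the same whitespace-only approximation of the word functions (the helper names are hypothetical, re-declared so the block runs on its own) and shows that both versions agree on one- and two-word last names, and that both stumble on a two-word first name.

```python
def word_count(text: str) -> int:
    """Rough stand-in for WordCount: number of whitespace-separated words."""
    return len(text.split())


def right_words(text: str, count: int) -> str:
    """Rough stand-in for RightWords (whitespace-separated words only)."""
    words = text.split()
    return " ".join(words[-count:]) if count > 0 else ""


def last_name_case(full_name: str) -> str:
    # Mirrors the Case() version: two words when the field holds three, else one.
    if word_count(full_name) == 3:
        return right_words(full_name, 2)
    return right_words(full_name, 1)


def last_name_wordcount(full_name: str) -> str:
    # Mirrors RightWords(name; WordCount(name) - 1): everything after the first word.
    return right_words(full_name, word_count(full_name) - 1)


for name in ["John Osborne", "Maria De Luca", "Mary Ann Smith"]:
    print(last_name_case(name), "|", last_name_wordcount(name))
# Osborne | Osborne
# De Luca | De Luca
# Ann Smith | Ann Smith   <- wrong: "Mary Ann" is a two-word first name
```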

You can simplify the formula further with the Let function (and make it easier to read):

Let(

@Name = CUSTOMERS::name_first;

RightWords(@Name; WordCount(@Name) - 1)

)

The Let function also makes it easier to update with different fields when you move the formula to a new database. Instead of manually replacing every occurrence of the first name field, it's only necessary to replace the variable declaration at the beginning. It also helps when moving a formula into Manage Database since it doesn't require local fields to have the table occurrence specified.

SIDETIP: Understanding what separates a word is the key to getting the results you want from the LeftWords, MiddleWords and RightWords functions. While a period is not considered a word separator, a period followed by a space is. In addition, the period is not recognized as the end of a word and will be dropped if it is the last word being grabbed but retained if it is in between two words. It is important to understand exactly how the algorithm works so you achieve the desired result and aren't surprised. Whenever you use the word functions, always test against sample data to ensure the expected behavior.

Parsing Impossibilities

If you happen to receive a file where the first name, last name, middle name, title and suffix are contained in a single column, you have your work cut out for you. While it can be done, the job definitely becomes complicated. In this rare scenario, it's best to refer to an article containing calculations that already accomplish the task. The purpose of this article is to teach useful parsing skills so it won't cover this ridiculous scenario. Just in case, here's an article on parsing this complicated scenario:

Extracting Title, First Name, Middle Name, Last Name from a field in FileMaker Pro

Advanced Level Parsing

The problem with the word functions is their reliance on an algorithm with built-in definitions of what separates a word. If the algorithm works for the problem at hand, it makes parsing easy and you should use these functions. So, what happens if we introduce the slightly more complicated data of city, state and zip in a single field or column? The city could be one, two or three words, maybe even more. The zip code could be a zip plus four. And what about the state sitting in the middle of the city and zip code? How do you extract that with just the word functions?

FYI: Words are the most common data to parse, which is why the word functions exist. However, other parsing jobs will require locating other separators, including multiple-character separators.

Let's start with the postal code to see if both zip and zip plus four can be extracted with the RightWords function. By testing this data, you will find the word functions don't consider a hyphen a word separator. In other words, a straightforward formula can do the job whether the data holds a zip or a zip plus four:

RightWords(

CUSTOMERS::city;

1

)

Of course, everything changes if some Canadian addresses get included with their space-separated postal codes. Your formula design always depends on the data at hand, so always consider the possibilities. If there are no Canadian postal codes, don't factor them into your calculation. How to parse mixed postal codes will be discussed later. Right now we need to establish some additional skills before attempting this advanced technique.

So... how do you isolate the state and city data? The problem with the word functions is that you are limited by the definition of the algorithm. While this makes the word functions easy to use, it also limits their flexibility. Rather than writing a complicated Case statement to support multiple word function results, it's better to use advanced parsing techniques. In order to grab the city, regardless of the number of words, use the following formula with the Position function nested inside the Left function. The Position function returns a value to the second parameter of the Left function, making the Left function dynamic instead of static:

Left(

CUSTOMERS::city;

Position(CUSTOMERS::city; ", "; 1; 1) - 1

)

Let's analyze this formula by starting with the nested Position function. The idea behind the Position function is to search a field or string starting from the left side. When it locates the search string, it returns its location as the number of characters from the beginning of the string or field. In this example, the Position function looks for the first comma (and space) and returns that location to the Left function. Since the desired end point is actually the character before the comma, one is subtracted from the position.

FYI: The Position function contains four parameters. The first parameter is the target field or string to be searched. The second parameter is the search string. The third parameter is which character, starting from the left, to start searching. The fourth parameter specifies the occurrence of the search string to return.

In most cases, the last two parameters are statically set as a value of "1". However, any of the parameters in the Position function can be provided by nested functions, providing flexibility not offered by the word functions. No need to rely on the rigid algorithm of the word functions. By combining the Left and Position function, and possibly additional functions, you can define your own algorithm, parsing based on any break character you choose.

SIDETIP: When searching for multiple characters, the result of the Position function is the location of the first character of the match. In other words, searching for "Mark" inside "John Mark" returns 6 and not 9.
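Here is a Python sketch of the Position and Left combination. The hypothetical `position` helper mimics the behavior described above for a positive occurrence (a 1-based result, 0 when nothing is found), and the address is sample data made up for illustration.

```python
def position(text: str, search: str, start: int, occurrence: int) -> int:
    """Rough stand-in for Position with a positive occurrence.

    Returns the 1-based location of the first character of the match, or 0.
    """
    index = start - 2  # so the first find() begins at 0-based index start - 1
    for _ in range(occurrence):
        index = text.find(search, index + 1)
        if index == -1:
            return 0
    return index + 1


def left(text: str, count: int) -> str:
    """Rough stand-in for Left: the first `count` characters."""
    return text[:max(count, 0)]


csz = "San Luis Obispo, CA 93401"  # sample city, state and zip in one field
comma = position(csz, ", ", 1, 1)  # 16: where ", " begins
print(left(csz, comma - 1))        # San Luis Obispo
print(position("John Mark", "Mark", 1, 1))  # 6, the start of the match, not 9
```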

Grabbing the state is a little more difficult but much better than trying to use the word functions within a long Case statement. Here is a parsing formula using the Middle function:

Middle(

CUSTOMERS::city;

Position(CUSTOMERS::city; ", "; 1; 1) + 2;

Position(CUSTOMERS::city; " "; Position(CUSTOMERS::city; ", "; 1; 1) + 2; 1) - Position(CUSTOMERS::city; ", "; 1; 1) - 2

)

While this complex formula looks terribly difficult to decipher, once you have written formulas like this hundreds of times, it becomes natural to read. For now, it's a good idea to study the intricate combination of nested functions in order to appreciate the Let function later. The Position function in the second parameter of the Middle function determines the location of the comma and then adds two characters to reach the beginning of the state. The third parameter does not provide the end location but the number of characters to grab. By subtracting the start from the end, the length is returned to the Middle function.

Ok, I give in, here's the same formula with the Let function. Soooooo much easier to read with all that duplicative code declared at the beginning:

Let(

@Position = Position(CUSTOMERS::city; ", "; 1; 1);

Middle(

CUSTOMERS::city;

@Position + 2;

Position(CUSTOMERS::city; " "; @Position + 2; 1) - @Position - 2

)

)

Now it's easier to see that the Position function locating the comma is used three times, showing how important it is to advanced parsing techniques. The interesting part is the last parameter of the Middle function, which wants the number of characters to grab. You'd think it would ask for the end character since the second parameter is the starting point. Regardless, it's not too difficult to make the Position function work if you remember your grade school mathematics: the end minus the start equals the distance. So, the comma-searching Position function tells the space-searching Position function to start looking for a space after the comma, guaranteeing it will locate the space after the state.
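The same arithmetic can be traced in Python. This is a sketch under the same assumptions as before: `position` and `middle` are hypothetical stand-ins for the FileMaker functions, and the addresses are sample data. The `comma` variable plays the role of `@Position`, and `state_end - state_start` equals the formula's third parameter, `Position(...) - @Position - 2`.

```python
def position(text: str, search: str, start: int, occurrence: int) -> int:
    """Rough stand-in for Position with a positive occurrence (1-based, 0 if missing)."""
    index = start - 2
    for _ in range(occurrence):
        index = text.find(search, index + 1)
        if index == -1:
            return 0
    return index + 1


def middle(text: str, start: int, count: int) -> str:
    """Rough stand-in for Middle: `count` characters beginning at 1-based `start`."""
    if count <= 0:
        return ""
    return text[start - 1:start - 1 + count]


def extract_state(csz: str) -> str:
    comma = position(csz, ", ", 1, 1)               # @Position
    state_start = comma + 2                         # skip the comma and the space
    state_end = position(csz, " ", state_start, 1)  # the space after the state
    # End minus start gives the number of characters for Middle's third parameter.
    return middle(csz, state_start, state_end - state_start)


print(extract_state("San Luis Obispo, CA 93401"))  # CA
print(extract_state("Austin, TX 78701"))           # TX
```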

Even though this combination of the Middle and Position functions is extremely important to understand, this formula can actually be simplified by nesting the RightWords function inside the LeftWords function:

LeftWords(

RightWords(CUSTOMERS::city; 2);

1

)

First, the RightWords function grabs the two rightmost words: the state and zip code. The result is returned to the LeftWords function, which grabs the first word from the left. Tricky but very effective! And, again, it fails when the data mixes US zip codes and Canadian postal codes. Keep both formulas handy depending on the data that is dropped in your lap.
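A Python sketch of the nested word functions, again with hypothetical whitespace-only helpers. Because this model splits only on whitespace, a zip plus four stays one word, which matches the hyphen behavior described earlier; the Canadian sample shows where the approach breaks.

```python
def left_words(text: str, count: int) -> str:
    """Rough stand-in for LeftWords (whitespace-separated words only)."""
    return " ".join(text.split()[:max(count, 0)])


def right_words(text: str, count: int) -> str:
    """Rough stand-in for RightWords (whitespace-separated words only)."""
    words = text.split()
    return " ".join(words[-count:]) if count > 0 else ""


def state_from_words(csz: str) -> str:
    # LeftWords(RightWords(csz; 2); 1): take the last two words, then the first of those.
    return left_words(right_words(csz, 2), 1)


print(state_from_words("San Luis Obispo, CA 93401"))       # CA
print(state_from_words("San Luis Obispo, CA 93401-1234"))  # CA
print(right_words("San Luis Obispo, CA 93401-1234", 1))    # 93401-1234
print(state_from_words("Toronto, ON M5V 2T6"))             # M5V  <- fails on a Canadian postal code
```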

While the word functions provide a simpler solution for extracting the state, and should be used when all the addresses have US zip codes, that doesn't diminish the power of complex parsing with the Position function. Features created through advanced parsing techniques are strewn throughout the FileMaker world, so it's a good idea to memorize the basics of complex text parsing. The best formula to remember is the first and last name example covered earlier. Given a one-word first name and a one-word last name stored in a single field, the following formulas will parse the data into separate fields:

Left(

CUSTOMERS::name_first;

Position(CUSTOMERS::name_first; " "; 1; 1) - 1

)

and

Right(

CUSTOMERS::name_first;

Length(CUSTOMERS::name_first) - Position(CUSTOMERS::name_first; " "; 1; 1)

)

The last name uses the same basic distance formula as the state example above. The difference is that the length of the entire field contents can be used as the ending point, since the field contains just two words. Subtracting the position of the space from the length returns the number of characters to grab from the right. This is necessary since the Position and Right functions work from opposite sides of a field or string.
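The foundational formula pair can be traced in Python with hypothetical stand-ins for Position, Left and Right. This is a sketch assuming a one-word first name and a one-word last name; `len()` plays the role of the Length function.

```python
def position(text: str, search: str, start: int, occurrence: int) -> int:
    """Rough stand-in for Position with a positive occurrence (1-based, 0 if missing)."""
    index = start - 2
    for _ in range(occurrence):
        index = text.find(search, index + 1)
        if index == -1:
            return 0
    return index + 1


def left(text: str, count: int) -> str:
    """Rough stand-in for Left: the first `count` characters."""
    return text[:max(count, 0)]


def right(text: str, count: int) -> str:
    """Rough stand-in for Right: the last `count` characters."""
    return text[max(len(text) - count, 0):] if count > 0 else ""


full_name = "John Osborne"
space = position(full_name, " ", 1, 1)           # 5
first = left(full_name, space - 1)               # 4 characters: "John"
last = right(full_name, len(full_name) - space)  # 12 - 5 = 7 characters: "Osborne"
print(first, "/", last)                          # John / Osborne
```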

I don't often recommend memorizing formulas but this is a great exception. This core formula is the basis of all complex data parsing and should be committed to memory. When the time calls for a complex parsing routine, most formulas will stem from this foundational formula. Use simpler approaches whenever possible but keep this trick handy just in case you are presented with a more complex scenario.

FYI: The key difference between the word functions and the combination of text functions with the Position function is the ability to control the algorithm to meet your needs. You aren't always parsing words so having the ability to change the search parameters is invaluable.

Mixed Zips and Postal Codes

So, what about those lists with US and Canadian addresses? That space in the middle of the postal code will mess up the simple RightWords approach defined previously. By combining RightWords and the Position function, it's possible to come up with a fairly simple solution:

Let(

@PostalState = Right(CUSTOMERS::city; Length(CUSTOMERS::city) - Position(CUSTOMERS::city; ", "; 1; 1));

RightWords(@PostalState; WordCount(@PostalState) - 1)

)

The Position function starts by locating the comma and then passes that result to the Right function, allowing it to grab the state and zip or postal code. Since the Position and Right functions start from different sides of the search field, it's necessary to subtract the position from the length to get the number of characters to grab from the right. Now you can step in with the RightWords and WordCount functions to grab either one or two words from the end. Pretty cool, aye!
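The mixed postal code formula, modeled in Python. As before, the helpers are hypothetical whitespace-only approximations of the FileMaker functions and the addresses are sample data. Note that `postal_state` keeps the space after the comma, which is harmless because the word functions skip it.

```python
def position(text: str, search: str, start: int, occurrence: int) -> int:
    """Rough stand-in for Position with a positive occurrence (1-based, 0 if missing)."""
    index = start - 2
    for _ in range(occurrence):
        index = text.find(search, index + 1)
        if index == -1:
            return 0
    return index + 1


def right(text: str, count: int) -> str:
    """Rough stand-in for Right: the last `count` characters."""
    return text[max(len(text) - count, 0):] if count > 0 else ""


def word_count(text: str) -> int:
    """Rough stand-in for WordCount (whitespace-separated words only)."""
    return len(text.split())


def right_words(text: str, count: int) -> str:
    """Rough stand-in for RightWords (whitespace-separated words only)."""
    words = text.split()
    return " ".join(words[-count:]) if count > 0 else ""


def postal_code(csz: str) -> str:
    comma = position(csz, ", ", 1, 1)
    # @PostalState: everything after the comma, e.g. " CA 93401".
    postal_state = right(csz, len(csz) - comma)
    # Drop the first word (the state) and keep the one or two words that remain.
    return right_words(postal_state, word_count(postal_state) - 1)


print(postal_code("San Luis Obispo, CA 93401"))  # 93401
print(postal_code("Toronto, ON M5V 2T6"))        # M5V 2T6
```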

SIDETIP: It is possible to get the Position function to work from the right side of a field or text string. Just specify a starting point at the end of the field or string and enter negative one for the occurrence. Here's a simple example:

Position(

"John Mark Osborne";

" ";

Length("John Mark Osborne");

-1

)
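A Python sketch of the right-to-left search, assuming that a negative one occurrence returns the last match that begins at or before the starting point. The helper name `position_backward` is hypothetical; it relies on `str.rfind`, which returns -1 when nothing is found, so adding one yields 0 just like a failed Position.

```python
def position_backward(text: str, search: str, start: int) -> int:
    """Rough stand-in for Position(text; search; start; -1).

    Finds the last match that begins at or before 1-based `start`; returns 0 if none.
    """
    # rfind() only reports matches that end before `end`, so widen it by len(search).
    end = start - 1 + len(search)
    return text.rfind(search, 0, end) + 1


name = "John Mark Osborne"
last_space = position_backward(name, " ", len(name))
print(last_space)                          # 10
# Equivalent to Right(name; Length(name) - last_space):
print(name[len(name) - (len(name) - last_space):])  # Osborne
```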

The Parsing Script

Let's move the formulas from the Data Viewer into a script to parse all the imported data. The easiest way to wrap the parsing formulas in a script is with Replace Field Contents steps. A looping script could also be used, but loops are generally slower. Since the first and last names are imported into the first name field and the city, state and zip are imported into the city field, the order of the Replace Field Contents steps is very important: the final steps erase the unwanted data from the first name and city fields. Therefore, it's best to make a backup before running this script.

SIDETIP: The Replace Field Contents script step causes record locking, so this type of script is best run in single-user mode or when no other users are on the system.
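Replace Field Contents can't be demonstrated outside FileMaker, but the ordering issue can. In this Python sketch, a list of dictionaries stands in for the found set (the records are invented sample data), and each loop plays the role of one Replace Field Contents step, with the same hypothetical word-function helpers as before. The last name must be filled in before the first name field is trimmed; swapping the two loops would throw the last name away.

```python
def word_count(text: str) -> int:
    """Rough stand-in for WordCount (whitespace-separated words only)."""
    return len(text.split())


def left_words(text: str, count: int) -> str:
    """Rough stand-in for LeftWords (whitespace-separated words only)."""
    return " ".join(text.split()[:max(count, 0)])


def right_words(text: str, count: int) -> str:
    """Rough stand-in for RightWords (whitespace-separated words only)."""
    words = text.split()
    return " ".join(words[-count:]) if count > 0 else ""


records = [
    {"name_first": "John Osborne", "name_last": ""},
    {"name_first": "Maria De Luca", "name_last": ""},
]

# Step 1: extract the last name while name_first still holds the full name.
for record in records:
    full_name = record["name_first"]
    record["name_last"] = right_words(full_name, word_count(full_name) - 1)

# Step 2: only now overwrite name_first with just the first word.
for record in records:
    record["name_first"] = left_words(record["name_first"], 1)

print(records)
```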

Extraction

Let's change up this exercise a little and imagine a title has been added to the names being imported. Titles include "Mr.", "Mrs." and "Ms." and always appear at the beginning of the name. A simple formula like the following works well:

RightWords(

CUSTOMERS::name_first;

WordCount(CUSTOMERS::name_first) - 1

)

This formula works well and removes the unwanted title but always assumes there is a title. What if some records don't have a title and just begin with the first name? A different approach has an advantage that will help in this specific situation:

Substitute(

CUSTOMERS::name_first;

["Mr. "; ""];

["Mrs. "; ""];

["Ms. "; ""]

)

SIDETIP: The Substitute function is case sensitive. Only a few other functions differentiate between upper and lower case so it is important to take note.

The Substitute function works like the find and replace feature in a word processor, locating every occurrence of a string and replacing it with a new string. It has three parameters. The first parameter is the target field. The second parameter is the search string and the third parameter is the replacement string. As usual, all parameters can reference nested functions instead of just simple field or string references.

In this example, the Substitute function uses square brackets to specify additional search and replace pairs. As many pairs as necessary can be included, as long as you are willing to enter them. The search string also includes a space within the quotes, not to make the search more precise, but to ensure no space is left at the beginning of the name when a title is removed.

FYI: Multiple search and replace pairs are processed in the order they appear in the Substitute formula. Be careful, as one replacement could affect another if they are not ordered properly.

The advantage of the Substitute function is its innate ability to do nothing if no title is found. Whereas the word function formula would need a Case statement and several PatternCount functions to handle names without a title, the Substitute function simply leaves them alone. The downside of the Substitute function is its precision. Not only is it case sensitive, but it also needs the exact spelling of a title in order to remove it. In addition, it requires another parameter for each title variation, whereas the word function version simply removes the first word no matter what.
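A Python sketch of the comparison. The hypothetical `substitute` helper applies each search and replace pair in order using `str.replace`, which, like Substitute as described above, is case sensitive. It is a model for experimenting, not FileMaker's implementation.

```python
def substitute(text: str, *pairs) -> str:
    """Rough stand-in for Substitute: case-sensitive pairs applied in order."""
    for search, replacement in pairs:
        text = text.replace(search, replacement)
    return text


def right_words(text: str, count: int) -> str:
    """Rough stand-in for RightWords (whitespace-separated words only)."""
    words = text.split()
    return " ".join(words[-count:]) if count > 0 else ""


titles = [("Mr. ", ""), ("Mrs. ", ""), ("Ms. ", "")]
print(substitute("Mrs. Jane Doe", *titles))  # Jane Doe
print(substitute("Jane Doe", *titles))       # Jane Doe (no title, nothing changes)
print(substitute("MRS. Jane Doe", *titles))  # MRS. Jane Doe (case sensitive)

# The word-function version drops the first word whether or not it is a title.
print(right_words("Jane Doe", len("Jane Doe".split()) - 1))  # Doe
```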

If you do have multiple spellings of the titles, you can easily add them to the formula. In this example, the space is critical in making sure "Mr " does not match the "Mr" in "Mr." or "Mrs.". Lowercase versions of the titles could also be replaced if necessary. The Substitute function is limited to 999 substitutions, so you can go crazy, just not too crazy.

Substitute(

CUSTOMERS::name_first;

["Mr "; ""];

["Mr. "; ""];

["Mrs "; ""];

["Mrs. "; ""];

["Ms "; ""];

["Ms. "; ""]

)
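The effect of the trailing space can be checked with the same hypothetical Python `substitute` model (case-sensitive `str.replace`, pairs applied in order):

```python
def substitute(text: str, *pairs) -> str:
    """Rough stand-in for Substitute: case-sensitive pairs applied in order."""
    for search, replacement in pairs:
        text = text.replace(search, replacement)
    return text


variants = [("Mr ", ""), ("Mr. ", ""), ("Mrs ", ""), ("Mrs. ", ""), ("Ms ", ""), ("Ms. ", "")]
print(substitute("Mr Bob Smith", *variants))   # Bob Smith
print(substitute("Mrs. Jane Doe", *variants))  # Jane Doe

# Without the trailing space, "Mr" also matches the start of "Mrs." and ruins the name.
print(substitute("Mrs. Jane Doe", ("Mr", ""), ("Mrs. ", "")))  # s. Jane Doe
```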

Another Substitute function gotcha is locating any matching string and replacing it. For example, running the Substitute function on a text field might replace more than anticipated. A search for "he" with a replace of "she" would not only fix the gender but also change "there" to "tshere". A simple fix is to create a unique search for "he" with a space on either side. Consider yourself warned and test any Substitute solution in the Data Viewer first.
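The "he" to "she" gotcha reproduced with the hypothetical Python `substitute` model. Padding the search with spaces avoids the partial matches, though it will still miss "he" at the very start or end of the text or next to punctuation, so testing against real data remains essential.

```python
def substitute(text: str, *pairs) -> str:
    """Rough stand-in for Substitute: case-sensitive pairs applied in order."""
    for search, replacement in pairs:
        text = text.replace(search, replacement)
    return text


sentence = "then he said there was a fix"
print(substitute(sentence, ("he", "she")))      # tshen she said tshere was a fix
print(substitute(sentence, (" he ", " she ")))  # then she said there was a fix
```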

Determining which calculation approach works better is a process of comparing and contrasting the methods against the situation at hand. Sometimes the word functions will be better. Sometimes the Substitute function will work well. The key is analyzing the data instead of blindly applying a solution. There is always more than one solution to a problem so think before you jump.

It's also interesting to note how the word functions and Substitute functions differ in their approach to the solution. While the word functions overwrite the original data with a subset of the existing data, the Substitute function actually extracts the unwanted information by replacing it with a blank value. Think about how these two approaches differ as they can often make a difference when choosing a methodology for your solution.

What Next?

Now you just need to wait for your next parsing challenge! You may not remember every single detail from this article but if you bookmark this article, you'll be ready to go when duty calls. It also can't hurt to practice a little so jump at any chance you have to test out your skills.

Author:

John Mark Osborne

jmo@filemakerpros.com

www.databasepros.com

Comments:

Mauricio Navarro

08/17/2018

Hi, John. Thank you for your response. To make sure, I see you added the "@" to the PostalState variable, but I still see the old field references in the blog: (Test::csz),CUSTOMERS::name_first, CUSTOMERS::city. I believe CUSTOMERS::city has City, State and Zip code to be parsed, right?, and CUSTOMERS::first_name has first and last name; but Test::csz? Not a big deal, just thought I mention it.

Response by: John Mark Osborne

08/28/2018

Sorry for the long response time. I just got back from vacation. I looked at the article again and only saw one reference to the Test table and I fixed it. Thanks for that! The references to the Customers table should all be correct. Let me know if you think otherwise. Appreciate your help.

Mauricio Navarro

08/15/2018

Hi. I'm always trying to learn from your blogs, but I always have my understanding doubts: first of all, I thought the data to be parsed was all in one field, but I see you mention several fields: CUSTOMERS::name_first,Test::csz, CUSTOMERS::city.

Second, the formula below doesn't show the use of @PostalState in the final calculation; so, how does it go or why @PostalState is stated if not used?

Let(

@PostalState = Right(Test::csz; Length(Test::csz) - Position(Test::csz; ", "; 1; 1));

RightWords(PostalState; WordCount(PostalState) - 1)

)

Response by: John Mark Osborne

08/17/2018

Sorry about that Mauricio. Both of your points are bugs in the article. I had some incorrect table and field references regarding your first point and forgot to add the at signs (@) on your second point. Thanks for saying something as I'm sure it confused a few others as well. I've changed the article. Add a new comment if it is still confusing. Thanks again and Happy FileMaking!

Steve M

06/27/2018

Another fantastic article. Data parsing is one of my favorite things to review on your videos.

Another article bookmarked for future and continuous reference. I wish there was a whole (book?) video just about this topic, similar to Chess books that show hundreds of position puzzles to solve :)

Response by: John Mark Osborne

06/27/2018

Thanks my friend. Funny thing is this blog started off as a book. I'm just about through all the content I had written for the book that I felt translated well to a blog. Time to start coming up with fresh stuff!
