"No Holding Back FileMaker Blogging"

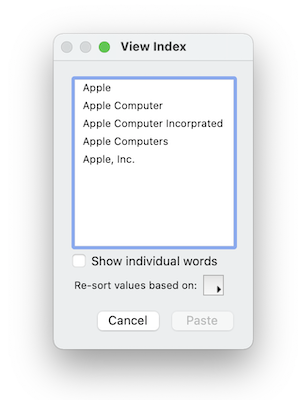

Collecting Unique Values

Level: Intermediate

Version: FileMaker 16

Category: Scripting

Tuesday, February 27, 2018

Since Darren Terry published his infamous article on saving found sets for future retrieval in 1996, a lot has changed in FileMaker. The additions from the last thirteen versions of FileMaker offer many alternatives for amassing unique identifiers. The differences in collection methods mostly come down to speed but there are a few other considerations that shouldn't be overlooked. However, restoring records remains the same, relying on the humble Go to Related Record (GTRR), and will not be discussed in this article. I also won't be able to point specifically to the originators of the additional methods for collecting unique values as they have been lost in the vastness of time. For all I know, I was the first to come up with some of these techniques.

Also, it's wise to read the first two articles in this series before starting this article:

1) Preserving Found Sets

2) Saving a Found Set

Why Serial Numbers

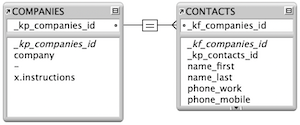

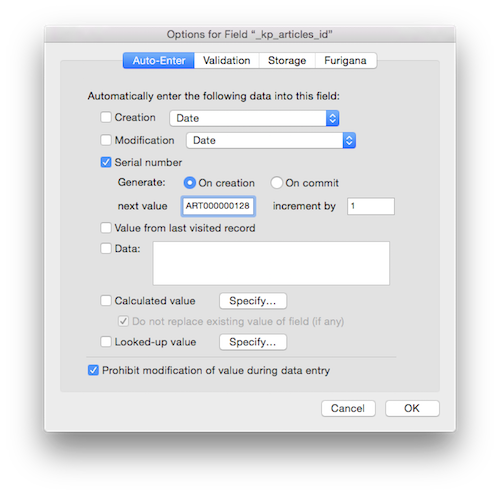

Let's start by talking about why serial numbers are the best unique identifier for saving and restoring found sets of records. There are three features for uniquely identifying a record: auto-enter serial numbers, Record IDs and UUID (Universally Unique Identifier). Record IDs are built into FileMaker and are automatically attached to each record. They were originally introduced for web deployment to identify a record without a serial number, but can be captured using the Get(RecordID) function for desktop or iOS deployment. UUID values have to be assigned with an auto-enter calculation using the Get(UUID) function. The UUID feature was initially introduced for distributed systems, where remote users don't always have an internet connection. Rather than adjusting each local copy of FileMaker with a different serial number range, UUID randomly generates a unique value. This allows locally stored data to be synchronized when there is a connection to the FileMaker Server.

All three of these features can be used to connect two tables in a relationship. However, I personally find serial numbers a better choice. For starters, serial numbers take up less space than UUID values, which are quite verbose at 36 characters: 32 hexadecimal characters plus four hyphens (e.g. 10D4126D-7EAD-4369-9CF6-306336B4E1FD). Compare this to a serial number, which starts off with a single digit and might grow to ten digits in a very large database. UUID values are frighteningly large right from the start and take up more space, especially when assembling them into a single field.
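To put rough numbers on the size difference, here is a small calculation sketch you could paste into the Data Viewer. The 50,000-record count and the five-digit serial are illustrative assumptions, not measurements from the example file; the UUID is the sample value quoted above.

```
// Rough size of a return-separated key list (one return counted per value)
Let ( [
  records = 50000 ;                                                 // assumed found set size
  uuidLength = Length ( "10D4126D-7EAD-4369-9CF6-306336B4E1FD" ) ;  // 36 characters
  serialLength = Length ( "50000" )                                 // 5 digits, the widest serial assumed here
] ;
  List (
    "UUID list: " & records * ( uuidLength + 1 ) & " characters" ;
    "Serial list: at most " & records * ( serialLength + 1 ) & " characters"
  )
)
```

Under those assumptions, the UUID list works out to 1,850,000 characters against no more than 300,000 for serial numbers, or roughly six times larger.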

Since UUID values contain letters, numbers and hyphens, they can only be used in text fields. This is not a huge deal but may prevent sorting by the unique identifier in any meaningful way. With serial numbers, I can make them a text or number field. I can sort them in reverse creation order if I so decide or even search on a range of values. UUID values are random with absolutely no meaning with regard to record creation and can even repeat in theory... that's a scary thought (insert scream emoji here).

When you start comparing serial numbers and Record IDs, they seem almost identical since both are sequential. Unfortunately, you have no control over Record IDs. As mentioned, they are assigned by FileMaker and cannot be changed. If a record is deleted, that Record ID can never be retrieved. On the other hand, serial numbers can be manipulated manually, through import or by script. You can even determine how serial numbers are constructed by adding leading zeros or characters. And, what if you want to import the data into a clone or a backup? All those Record IDs will be lost, destroying your relationships. Think about it. Record IDs are assigned by FileMaker so a new database means new IDs, even if the records are imported.

So, what does all this have to do with constructing a return-separated list of serial numbers? Well, that multi-key needs to be used in a relationship to restore records so I'd rather use a unique identifier that's small, flexible and reliable. Reserve Record IDs for the web and UUID for distributed systems. Serial numbers are really the best approach for defining a primary key in most scenarios and especially for the technique being discussed in this article.

Alternatives to Copy and Paste

So... what's wrong with the Copy All Records step, as covered in the previous article Saving a Found Set? Simply put, it destroys the contents of the clipboard. What if a user manually copies a value to the clipboard and you just destroy it? That's right, they'll probably be irritated. I mean, can you think of any professional application on the market that warns you that the contents of the clipboard may be destroyed when using their product? You can't think of any because there aren't any. And, your FileMaker solution should be no different, even if it only has one feature that destroys the clipboard.

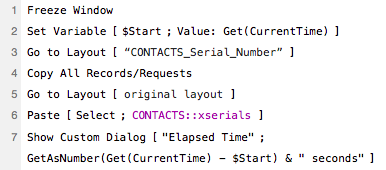

What the Copy All Records step has going for it is that it's easy to implement and wicked fast. Even a found set of tens of thousands of records is copied and pasted in less than a second. If you don't believe me, download the example file that accompanies this article and test the speed. It does require a dedicated layout but that's not too much clutter, right? But, that clipboard destruction is a biggy for me! That's why we're going to cover alternative methods. Each has an advantage and disadvantage so pay attention to each approach. Even if you never use a technique presented here, it may spur some ideas for a different solution. Don't be a one trick pony.

SIDE TIP: Each of the scripts shown in this article contains a speed test using a Set Variable step to start the stopwatch and a Show Custom Dialog step to display the elapsed time.
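A minimal sketch of that stopwatch pattern follows. The article doesn't say which function the example file uses to read the clock, so Get ( CurrentTimeUTCMilliseconds ) and the variable name $start are assumptions here.

```
# Start the stopwatch
Set Variable [ $start ; Value: Get ( CurrentTimeUTCMilliseconds ) ]

# ... the collection steps being timed go here ...

# Report the elapsed time in seconds
Show Custom Dialog [ "Speed Test" ; ( Get ( CurrentTimeUTCMilliseconds ) - $start ) / 1000 & " seconds" ]
```

Capturing the start time in a local variable keeps the test self-contained, so the timing steps can be dropped into any of the scripts without adding fields.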

Global Loop

Every developer knows how to loop through records. Unfortunately, it can get slow but sometimes it's the only way to get done what you want to accomplish. Luckily, a global loop isn't the only alternative to Copy All Records but it's important to mention this approach since there are a few advantages. First, it's very easy to implement. If a developer learns any intermediate level scripting, it's likely to include record looping. And, if the average number of records gathered is fewer than one hundred, the speed degradation of this archaic approach won't be noticeable. It's only when you get into thousands of records that loops get noticeably slow when compared to other methods.

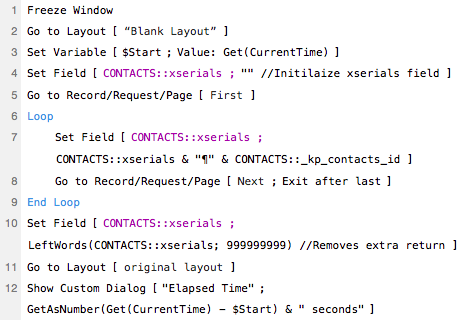

Another advantage is that there is no need for additional layouts. Just loop through the current layout. However, a complicated layout with related fields and unstored calculations can slow down a loop tremendously, even with the Freeze Window script step. Therefore, it's a good idea to create a blank layout based on the table you want to loop. Blank means a theme of Minimalist, no fields, no graphics, no nothing! This will speed up the redraw as each record is visited. Again, even if Freeze Window is implemented.
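For readers without the example file, a global loop along these lines is one way to sketch the idea. The names Contacts::Serial and Globals::SavedSet are hypothetical stand-ins for your serial number field and global text field, and the script assumes you are already on a layout based on the table being collected.

```
Freeze Window
# Start with an empty global field
Set Field [ Globals::SavedSet ; "" ]
Go to Record/Request/Page [ First ]
Loop
  # Append the current record's serial number and a return
  Set Field [ Globals::SavedSet ; Globals::SavedSet & Contacts::Serial & ¶ ]
  Go to Record/Request/Page [ Next ; Exit after last: On ]
End Loop
# removing extra return
Set Field [ Globals::SavedSet ; LeftWords ( Globals::SavedSet ; WordCount ( Globals::SavedSet ) ) ]
```

Every pass through the loop writes to the global field, which is where most of the time goes as the found set grows.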

Variable Loop

Amazingly, writing values to a variable instead of a global field is significantly faster: as much as three times faster in my testing with sets of up to 50,000 records in a single-user setup. In a multi-user scenario, the speed difference can be exacerbated even further depending on the connection. After the loop is complete, write the variable value to a global field or wherever you want to store the return-separated values. Same process as a global loop, just faster.

The global and variable loop methods both have a Set Field step after the End Loop step that's commented as "removing extra return". While an extra return at the beginning or end of a return-separated list won't make a difference with a relationship, it could in other scenarios such as parsing. Therefore, it's a good idea to remove it in the name of good practice so it doesn't come back to bite you later.
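As a rough sketch of the variable version, assuming hypothetical names (Contacts::Serial for the serial number field, Globals::SavedSet for the global text field and $serials for the variable), the script might look like this:

```
Freeze Window
Go to Record/Request/Page [ First ]
Loop
  # Accumulate in memory instead of writing to a field on every pass
  Set Variable [ $serials ; Value: $serials & Contacts::Serial & ¶ ]
  Go to Record/Request/Page [ Next ; Exit after last: On ]
End Loop
# removing extra return
Set Field [ Globals::SavedSet ; LeftWords ( $serials ; WordCount ( $serials ) ) ]
```

The only field write happens once, after End Loop, which fits the speed difference described above.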

There are many ways to remove extra returns but in this case I chose the simple LeftWords approach. Since I know the serial numbers do not contain any word separators, I can count on the return character separating each value into one word. Based on the words algorithm, word separators not surrounded by a word on each side are dropped. For example:

LeftWords(",John Mark Osborne."; 10)

Results:

John Mark Osborne

Even though my name was preceded by a comma and followed by a period (both word separators like the space and the return character) they are dropped because they are not surrounded by words.

I could have used more precise calculation mechanisms like:

Left($Serials; Length($Serials) - 1)

However, this only takes care of the return at the end. I find LeftWords more versatile, removing returns from the beginning and end of a list. You just need to make sure your data doesn't contain any dangling separators that you wish to keep.

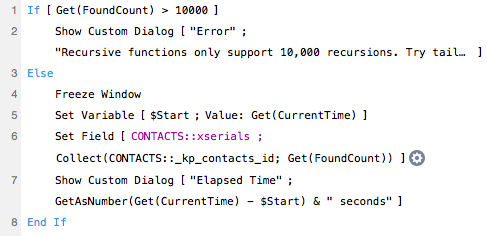

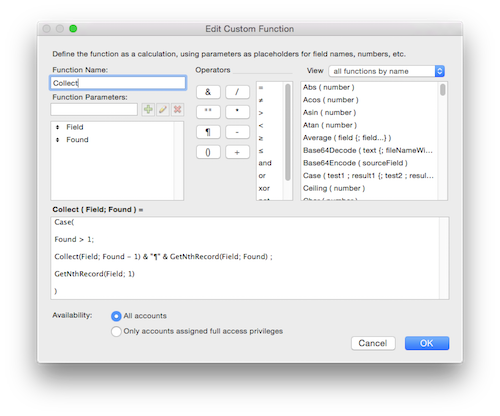

Custom Functions

One way to capture return-separated lists from the found set without a script is to use a Custom Function with recursion. It's a lot more complicated than a loop but doesn't require a script since it's completely calculation based. Of course, you can use it in a script with a Set Field if you want, as we've done with the example file that comes with this article. Because the Custom Function does all the work, the script is only one line.

When you dig deeper into the Custom Function, you'll find a recursive formula that can look complex, especially if you've never written a recursive function before.

The basic premise is pretty simple. Use a conditional statement to keep performing the same formula over and over until some criteria is met. Each time the formula iterates, it concatenates the accumulated result of the formula to the newest result, essentially amassing all the serial numbers from the found set. The formula is reiterated by including the name of the Custom Function in the formula itself, each time calling itself with the found set parameter decremented by one. When the Found parameter reaches zero, the Case statement test is false, the loop exits and the final result is returned.
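The premise above could be sketched as the following custom function. This is not the function from the example file: the name CollectSerials, the fieldName parameter and the field Contacts::Serial are all assumptions for illustration. Passing the field name as text and resolving it with Evaluate is one way to keep the function independent of any particular table.

```
// Custom function: CollectSerials ( fieldName ; found )
// Called as: CollectSerials ( GetFieldName ( Contacts::Serial ) ; Get ( FoundCount ) )
Case (
  found > 0 ;
  // Recurse on the records before this one, then append this record's value
  CollectSerials ( fieldName ; found - 1 ) &
  Evaluate ( "GetNthRecord ( " & fieldName & " ; " & found & " )" ) & ¶
)
```

Because the recursive call sits inside a concatenation, each call has to wait for the one below it to finish, so this form is subject to the standard recursion limit.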

The big disadvantage of recursion is the limit of ten thousand iterations. This ceiling prevents an endless loop that might exhaust available memory and crash FileMaker. So, if you try to recurse more than the maximum, the result becomes a question mark. Tail recursion gets around this limitation by using a parameter to hold the result rather than stacking it in memory. There's still a limit to prevent an infinite loop but you now get fifty thousand iterations, represented by the maximum number of distinct recursive calls of five different custom functions (5 * 10,000 = 50,000).
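A tail-recursive variant might look like the sketch below. As before, the function name, its parameters and Contacts::Serial are hypothetical, and the example file may structure this differently. The accumulated list travels in the result parameter, and the recursive call is the last thing the formula does.

```
// Custom function: CollectSerialsTail ( fieldName ; found ; result )
// Called as: CollectSerialsTail ( GetFieldName ( Contacts::Serial ) ; Get ( FoundCount ) ; "" )
Case (
  found > 0 ;
  CollectSerialsTail (
    fieldName ;
    found - 1 ;
    // Prepend this record's value so the list ends up in found set order
    Evaluate ( "GetNthRecord ( " & fieldName & " ; " & found & " )" ) & ¶ & result
  ) ;
  // Nothing left to collect: hand back the accumulated list
  result
)
```

Since the function counts down from the last record, each value is prepended rather than appended, which keeps the final list in the same order as the found set.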

List Summary

The newest kid on the block is the "List of" Summary field, introduced in FileMaker 13. It also has a simple script but unlike the Custom Function, constructing this script and field combination is only a few clicks. All you need is a Summary field and a script with a Set Field. It couldn't be easier! If you want to see the single script that sets the List of Summary field, feel free to open the example files. All that is shown below is a definition for the List of Summary field.
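In outline, the field and script combination could look like this. The names SerialList, Contacts::Serial and Globals::SavedSet are assumptions for illustration, not the names used in the example file.

```
# Field definition (Manage Database), in the same table as the serial number:
#   SerialList    Summary    = List of Serial

# Script, run from a layout based on that table:
Set Field [ Globals::SavedSet ; Contacts::SerialList ]
```

The summary field evaluates across the current found set, so a single Set Field captures every serial number without a loop.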

What's the Best Approach?

There really is no best approach. Yes, I tend to steer towards the List of Summary field approach because it's super fast and easy to implement. This saves my clients money and gives them the best experience, even with large found sets. If you have a version of FileMaker earlier than 13, I would lean towards Copy All Records for large sets and the variable loop for smaller sets. But, which approach you choose really depends on your unique scenario.

I'm Not Done Yet!

There's one more article in this series. I want to cover alternatives to restoring records such as Snapshot Link and find criteria restoration. I think this is a good end to this series of articles and will provide some scripting and calculation meat for more advanced readers to chew on.

Author:

John Mark Osborne

jmo@filemakerpros.com

www.databasepros.com

Example File (available to patrons at $5.00 per month): Download here if you are a patron

Comments:

Shane Nychyporuk

09/07/2019

Hi John Mark, thanks for a great set of articles... I never thought much about how important it is to preserve the found set....

Regarding your reason for not using copy and paste, am I missing something or would it be possible to simply do a paste into a global field first to preserve whatever is currently on the clipboard and then copy it back to the clipboard at the end of the scripting? And of course we would empty the global field again to reclaim any lost memory space..???

Response by: John Mark Osborne

09/10/2019

Glad you are enjoying the article series. You could copy and paste the contents of the clipboard into a global container field but you are likely to change the format of the contents. Better just not to mess with the clipboard. In 25 years of development, I've never needed to use the clipboard to move data. Better to just use the other available tools.
