Parsing for Features

Level: Advanced

Version: FileMaker 17

Category: Calculations

Tuesday, July 24, 2018

Data parsing isn't just for manipulating data into a preferred format. You can also create features that weren't otherwise possible with standard FileMaker tools. That's one of the things I love about FileMaker. Instead of giving you a one-dimensional menu item to support a specific need, the way Microsoft does, FMI gives you multidimensional tools. By combining two or more tools, you can create a new capability in a FileMaker solution. Among the most overlooked tools are the text and value functions. I aim to demonstrate several examples in this article that highlight how parsing can make wine out of water.

This is the third in a series of parsing articles, so make sure you don't miss the first two: Data Parsing and Parsing A Web Form.

New Requests Made Easier

One of the toughest things to explain to the users of my solutions is how a new request works. They kinda get it when I explain it to them but then they don't use the feature very often so the knowledge melts away into the daily grind of the work day. They really shouldn't have to understand how FileMaker works IMHO. If they are going to perform an action often enough, it should be scripted into an interface that is completely intuitive. For me, scripting new requests to sit behind the scenes is a prime example of how to make FileMaker easier to use for casual users.

This example has been greatly simplified to emphasize the foundations of the technique. It's your job to figure out how it fits into your more complicated solution. All I can tell you is I use this technique in just about every single one of the custom FileMaker solutions I create. And, it's not that hard to integrate into a solution. I think it took me all of ten minutes to create the example solution from scratch which included a popover, two fields and two scripts. Besides, this data parsing method will save you tons of phone calls from users asking you how to create a new request. I think the reduced support more than makes up for the development time.

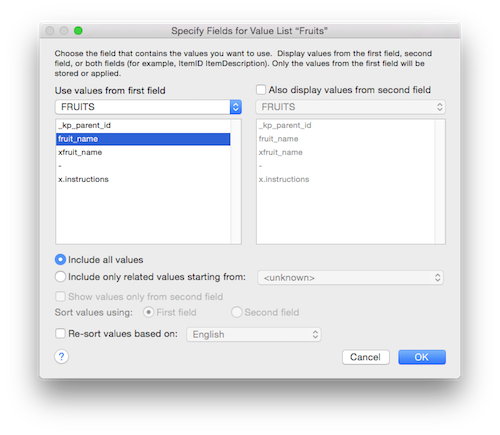

The idea behind this example file is that users need to find multiple fruit records. They could go into find mode and create a new request for each fruit they want to find. Or, they could use check boxes and a script to make the process a no-brainer. As mentioned, you need two fields. You probably already have the first field as it's the focus of the search. In this example, we have a database of fruits so the field is titled "fruit_name". All you need to create is a global field counterpart called "xfruit_name", "gfruitname", "zFruitName" or whatever your naming conventions dictate. Place the global field in a popover and format it as a check box using the contents of the "fruit_name" field.

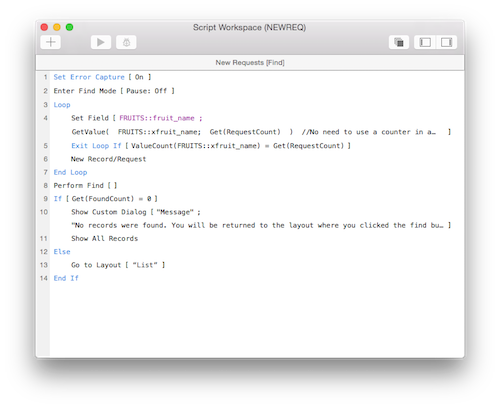

The hardest part of the feature is the script and it's not really that hard. All you need to do is loop through the values in the check box and create a new request with each one. Check box formatted fields store multiple values in a return-separated list so it's easy to parse using value functions.

The first step is to turn on error capture in case no records are found. Enter find mode and then start the loop. Since global values are available in find mode, there is no need for a special bridge from browse to find mode. All you need to do is grab each value using the GetValue function. The hardest part, and it's really not that hard, is incrementing the value number passed as the second parameter of the GetValue function. Most people use a counter in a variable. This works fine but I find it more efficient to use the Get(RequestCount) function. Each time you create a new request, Get(RequestCount) increments just like $Counter + 1. You can even exit the loop by comparing the number of values in the global field to the number of requests.

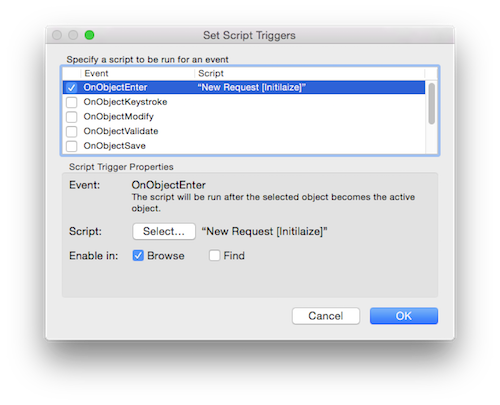
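If you want to trace the loop logic outside FileMaker, here is a minimal Python sketch. It is a model of the steps described above, not FileMaker script. The function name `build_find_requests` is hypothetical, the `requests` list stands in for the find requests, `len(requests)` plays the role of Get(RequestCount), and a newline stands in for FileMaker's return character. It assumes that entering find mode starts you off with one empty request.

```python
def build_find_requests(checkbox_value):
    """Model the find loop: one request per checked value.

    checkbox_value is the return-separated content of the global check box
    field (a newline stands in for FileMaker's return character).
    """
    values = checkbox_value.split("\n") if checkbox_value else []
    requests = [""]  # assumption: find mode starts with one empty request
    while True:
        request_count = len(requests)  # stands in for Get(RequestCount)
        # GetValue is 1-based and returns "" when asked for a missing value
        if request_count <= len(values):
            value = values[request_count - 1]
        else:
            value = ""
        requests[-1] = value  # Set Field on the current request
        if request_count >= len(values):  # Exit Loop If
            break
        requests.append("")  # New Record/Request
    return requests


print(build_find_requests("Apple\nBanana\nCherry"))
# ['Apple', 'Banana', 'Cherry']
```

Notice there is no separate counter to maintain. The request count does double duty as the loop index and the exit test.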

Once all the requests are created, perform the find and test for no records found. See, that wasn't that hard. Once you learn the foundations of this technique, applying it to your more complex project will be a breeze. There's one last tidbit, however. I like to initialize the global field each time I open the popover so I add a script trigger that runs OnObjectEnter when the popover is activated.

The script is hardly worth mentioning since all it does is set the global field to nothing using the Set Field step. What I'm more concerned about is that you learn how important this step is. Don't leave the remnants of developer testing in single-user mode sitting in the global field so users have to uncheck the same options every time they use the feature. Always think of the user!

SIDE TIP: The default value for a global field is the last value the developer left in the field before it was uploaded to the server.

Strange but True

I've used this technique twice in my career but the knowledge gained from it has seeped into hundreds of other programming jobs. So, don't try to understand how you would use this exact technique. Rather, understand that it accomplished a task. Think of it as weight lifting for your FileMaker brain. While weight lifting builds your muscles, it's not really the activity you are trying to learn as much as the benefits you gain from that activity.

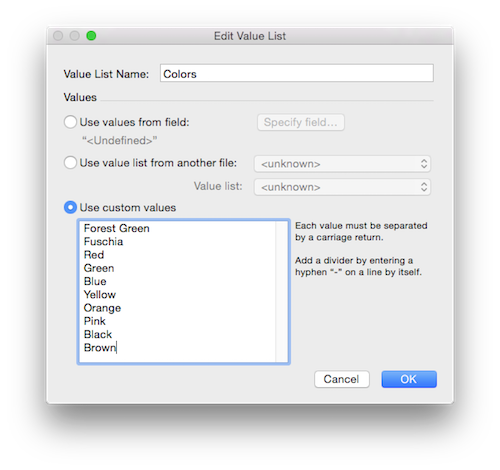

Anyhow, the idea behind this feature is to figure out the number of the choice from a popup menu. Let's say you have a popup menu based on a value list of colors named "Colors".

Determining where the selected value sits in that list is not easy. Seems like it should be but it's not. The idea is to grab the values from the value list using the ValueListItems function.

ValueListItems(Get(FileName); "Colors")

FYI: Use the Get(FileName) function in the first parameter of the ValueListItems function, instead of hard coding the file name, in case your file name changes.

The result is a return-separated list of values in the order they display in the popup menu.

Forest Green

Fuschia

Red

Green

Blue

Yellow

Orange

Pink

Black

Brown

The next step is to locate the selected value in the list of all the values. The Position function returns the character position counting from the left of the field. If "Fuschia" is selected then a 14 is returned, representing the beginning of the search string (the value selected from the popup menu). Don't forget to count the invisible carriage return.

Position(@VLI; @Field; 1; 1)
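To check the 14 for yourself, here is a small Python sketch. The `position` function is a hypothetical, rough model of FileMaker's Position (1-based result, 0 when nothing is found); it does not model negative occurrence values. A newline stands in for the return character.

```python
def position(text, search, start=1, occurrence=1):
    """Rough model of FileMaker's Position: 1-based index, 0 if not found."""
    index = start - 1  # convert the 1-based start to a 0-based index
    for _ in range(occurrence):
        index = text.find(search, index)
        if index == -1:
            return 0
        index += 1  # 1-based result, and the next search starts past this match
    return index


colors = "\n".join(["Forest Green", "Fuschia", "Red", "Green", "Blue"])

# "Forest Green" is 12 characters, the return is the 13th, "Fuschia" starts at 14
print(position(colors, "Fuschia"))  # 14
```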

The Position location is then passed to the second parameter of the Left function.

Left(@VLI; Position(@VLI; @Field; 1; 1))

Since the Position function returns a number associated with the beginning of the string, when it is combined with the Left function, the result looks like the following if "Blue" is selected from the popup menu:

Forest Green

Fuschia

Red

Green

B

The final step is to count the number of values in the truncated list. Here's the entire formula.

Let(

[@Field = "¶" & popup & "¶";

@VLI = "¶" & ValueListItems(Get(FileName); "Colors") & "¶";

@Location = Left(@VLI; Position(@VLI; @Field; 1; 1))];

ValueCount(@Location)

)

You'll notice in the complete formula above that carriage returns are added to the beginning and ending of the popup field and the ValueListItems result. The reason becomes clear if you remove the returns and choose "Green" from the popup menu. Instead of locating "Green", the Position function locates the "Green" in "Forest Green" since it's the first "Green" it comes across. Adding returns to the beginning and ending of the value forces the search to match only a complete value.
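Here is the whole formula modeled in Python so you can see the "Green" problem with and without the extra returns. Treat it as a sketch: `value_count` and `choice_number` are hypothetical names, `value_count` only approximates ValueCount (a single trailing return adds no value), and a newline stands in for the return character.

```python
COLORS = ["Forest Green", "Fuschia", "Red", "Green", "Blue",
          "Yellow", "Orange", "Pink", "Black", "Brown"]


def value_count(text):
    """Rough model of ValueCount: a single trailing return adds no value."""
    if text == "":
        return 0
    if text.endswith("\n"):
        text = text[:-1]
    return text.count("\n") + 1


def choice_number(selected, values, wrap=True):
    """Return the 1-based place of `selected` in `values`, or 0 if absent."""
    field = selected
    vli = "\n".join(values)
    if wrap:  # the "¶" & ... & "¶" step from the Let formula
        field = "\n" + field + "\n"
        vli = "\n" + vli + "\n"
    pos = vli.find(field) + 1  # Position: 1-based, 0 when not found
    return value_count(vli[:pos])  # ValueCount(Left(vli; pos))


print(choice_number("Green", COLORS, wrap=False))  # 1, matched inside "Forest Green"
print(choice_number("Green", COLORS, wrap=True))   # 4
print(choice_number("Blue", COLORS, wrap=True))    # 5
```

The leading return is what makes the count come out right in the wrapped version. It creates an empty first value, so the truncated list always has exactly as many values as the place of the selected item.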

Multi-Selection

I don't use this technique very often anymore because I firmly believe in simplicity. While this technique isn't that complex, I try to steer my clients towards the basics of find, list view and form which don't require any fancy footwork with scripts, calculations and relationships. Even if you never use this application of text parsing, it teaches you how to work with return-separated lists. Return-separated lists are so common in FileMaker that manipulating them is essential to a career in development.

SIDE TIP: Almost every function that returns multiple values does so in a return-separated list. With the support of the Value and Text functions, manipulating a list of values can be accomplished in just about every manner conceivable.

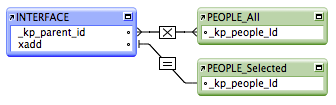

In the chosen example, a portal of record choices is shown and the user can add them to another portal. Think of it as a way to add multiple related record choices to a parent record while viewing your progress. In the downloadable example at the end of this article, a list of names is presented in a portal via a cartesian product relationship. Clicking a name adds the primary key to a global field. Each primary key is separated by a return, storing the values as a return-separated list. The global field is then used in a relationship to display the selected choices via a multi-key relationship.

A cartesian product relationship (X) shows all records from the relationship, regardless of the key fields. A Multi-Key relationship is a term that references a key field containing multiple values. Each value is separated by a return, allowing a relationship to view the values as separate matches.
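If the two relationship types are new to you, this Python sketch models the matching rule each one applies. It is only an analogy: the `PEOPLE` records, their ids and names, and both function names are made up for illustration, and a newline stands in for the return character.

```python
PEOPLE = [
    {"id": "1", "name": "Ann"},
    {"id": "10", "name": "Bob"},
    {"id": "23", "name": "Cat"},
]


def cartesian_match(records):
    """Cartesian product: every record is related, whatever the key holds."""
    return list(records)


def multi_key_match(records, multi_key):
    """Multi-key: a record is related if its id equals any one value."""
    keys = set(multi_key.split("\n")) - {""}  # empty values match nothing
    return [r for r in records if r["id"] in keys]


selected = multi_key_match(PEOPLE, "10\n23")
print([r["name"] for r in selected])  # ['Bob', 'Cat']
```

Each value is compared whole, so a key of "10" never pulls in the record with an id of "1".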

Adding a primary key value to a global field in a return-separated list is fairly easy. All you have to do is use a technique called Append along with the Set Field script step.

Set Field [INTERFACE::xadd; INTERFACE::xadd & "¶" & PEOPLE_All::_kp_people_id]

FYI: The Append technique is a method for concatenating a new value to a field. Normally, the Set Field step replaces the content of the target field with the result of the formula. However, if the target field is referenced in the formula, the original value is retained.

This works great in most cases but could be better. First, without duplicate checks for primary keys, it's possible to load up the global field with a lot of superfluous data. All you have to do is add a simple Case statement to the formula portion of the Set Field step.

INTERFACE::xadd &

Case(

not PatternCount("¶" & INTERFACE::xadd & "¶"; "¶" & PEOPLE_All::_kp_people_id & "¶");

"¶" & PEOPLE_All::_kp_people_id

)

Carriage returns are concatenated at the beginning and end of the global and primary key field to make sure the PatternCount finds the correct value. For example, if the script searches for a "1" in a global field that contains "10¶23¶14", it's going to find "1" twice. If the script searches for "¶1¶" in a global field that contains "¶10¶23¶14¶", it doesn't find "1", allowing the conditional to add the new value.

A few more pieces should be added to guarantee that a clean list of values is maintained. For instance, when the first value is added to the global field, an extra return is left at the beginning. In addition, extra returns are added to the beginning and end of the global field due to the uniqueness search introduced in the previous paragraph. While the extra returns won't affect the multi-key relationship, they could cause unexpected results when parsing the values. The extra returns can be removed many ways but I'll go for the easiest approach in my mind.
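The "10¶23¶14" example is easy to try in Python. This is a sketch of the duplicate check only, with a hypothetical function name `append_unique` and a newline standing in for the return character. The `in` test stands in for a PatternCount result greater than zero.

```python
def append_unique(key_list, new_id):
    """Append new_id to a return-separated list unless it is already a value."""
    wrapped_list = "\n" + key_list + "\n"
    wrapped_id = "\n" + new_id + "\n"
    if wrapped_id in wrapped_list:  # stands in for PatternCount(...) > 0
        return key_list
    return key_list + "\n" + new_id


keys = "10\n23\n14"
print("1" in keys)                        # True, the unwrapped search is fooled
print(repr(append_unique(keys, "1")))     # '10\n23\n14\n1'
print(repr(append_unique(keys, "23")))    # '10\n23\n14'
```

Like the Set Field formula, this version still leaves a leading return when the list starts out empty.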

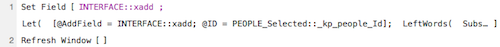

Let(

[@AddField = INTERFACE::xadd;

@ID = PEOPLE_All::_kp_people_Id];

LeftWords(

@AddField

&

Case(

not PatternCount("¶" & @AddField & "¶"; "¶" & @ID & "¶");

"¶" & @ID

);

999999999)

)

The Let function has been added to make the formula easier to read and update. However, the newest addition is the LeftWords function. By providing LeftWords with a large number like 999,999,999, all values are grabbed. However, any leading or trailing word separators not surrounded by words are lopped off. This approach requires knowing your data, because periods, commas, spaces and other word separators at the beginning or end of the list are lost along with the returns. Since the primary keys contain only numbers, this return trimming option is completely safe and easy to implement.

FYI: A return-separated list should have a carriage return between each value but no return at the beginning or end of the list.

Deleting values from a return-separated list is a little bit harder but is softened by having a properly formatted list. The easiest approach is to use the Substitute function.

Let(

[@AddField = INTERFACE::xadd;

@ID = PEOPLE_Selected::_kp_people_Id];

LeftWords(

Substitute(

"¶" & @AddField & "¶";

"¶" & @ID & "¶";

"¶");

999999999)

)

The same technique you learned for adding a value is employed for removing one. In order for the Substitute function to locate the exact primary key to be removed, carriage returns are added to the beginning and ending of the global and primary key fields. When a value is found, it is replaced with a carriage return to keep the surrounding values separated. The LeftWords technique is also utilized to remove the extra returns that get added to the beginning and end of the global field.
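The removal steps can be sketched in Python as well. The function name `remove_value` is hypothetical and a newline stands in for the return character. Note the swap: `strip("\n")` stands in for the LeftWords trim and only removes returns, whereas the real LeftWords also drops other leading and trailing word separators.

```python
def remove_value(key_list, target_id):
    """Remove target_id from a return-separated list, keeping the list clean."""
    wrapped = "\n" + key_list + "\n"  # "¶" & @AddField & "¶"
    # Replace the wrapped value with a single return so neighbors stay separated
    replaced = wrapped.replace("\n" + target_id + "\n", "\n")
    return replaced.strip("\n")  # trim the leading and trailing returns


print(repr(remove_value("10\n23\n14", "23")))  # '10\n14'
print(repr(remove_value("10\n23\n14", "1")))   # '10\n23\n14'
```

Searching for "1" leaves the list alone because neither "10" nor "14" matches once the returns are part of the search string.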

A Refresh Window step was added to prevent redraw issues without requiring a Commit Records/Requests step. A Refresh Portal step was attempted but remnants of the deleted row remained.

Share your Parsing Stories

As mentioned, I use parsing techniques all the time to create features. I chose these examples because they are easy to explain and have a wide appeal. I'm sure the readers out there have tons of examples so please share them in the comments section below. Happy FileMaking!

Author:

John Mark Osborne

jmo@filemakerpros.com

www.databasepros.com

Example File (available to patrons at $5.00 per month): Download here if you are a patron

